Microsoft 365 Copilot is quickly becoming a default way for people to write, summarize, search, and make decisions inside Microsoft 365. Microsoft says nearly 70% of the Fortune 500 use Microsoft 365 Copilot. More broadly, the Work Trend Index reports that 75% of knowledge workers use AI at work, and 78% of those AI users bring their own AI tools ("BYOAI").

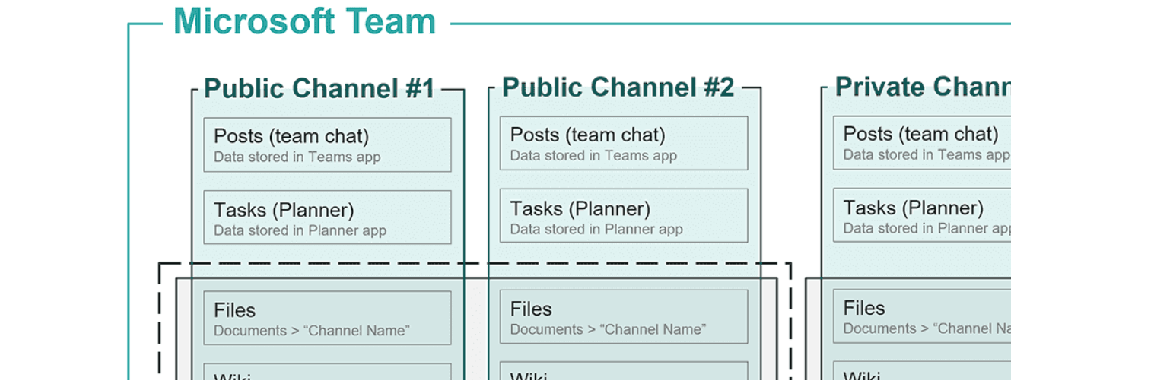

Copilot isn't a single app. It's integrated across Microsoft 365 experiences, including Word, Excel, PowerPoint, Outlook, Teams, Loop, Entra, and Copilot Chat for cross-app prompting.

In that same environment, heavy Microsoft 365 users can see 250+ emails and 150+ chats per day, and Teams meetings per person are up 3x since 2022. In a randomized field experiment of 6,000+ workers across 56 firms, Copilot users spent 18% less time reading email and completed documents 12% faster. But Copilot doesn't just help; it also creates a growing stream of AI interaction data across the business.

Under the hood, Copilot is grounded in organizational context through Microsoft Graph and draws on everything a user is permitted to access, including sensitive corporate records and the personal data of customers and partners.
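To make the permission point concrete, here is a minimal sketch of permission-trimmed grounding. It is an illustrative toy model, not Microsoft's implementation and not a Microsoft Graph API call: the `Document` class, the `allowed_users` field, and the `grounding_candidates` function are all hypothetical names, and matching is a plain substring check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical stand-in for an item an assistant could ground on."""
    doc_id: str
    text: str
    allowed_users: frozenset[str]  # assumed, simplified access list
    sensitive: bool = False


def grounding_candidates(user: str, query: str, corpus: list[Document]) -> list[Document]:
    """Return documents the user may access whose text mentions the query term."""
    term = query.lower()
    return [
        doc
        for doc in corpus
        if user in doc.allowed_users and term in doc.text.lower()
    ]


corpus = [
    Document("d1", "Q3 revenue forecast", frozenset({"alice", "bob"})),
    Document("d2", "Customer contract with revenue terms", frozenset({"alice"}), sensitive=True),
    Document("d3", "Team lunch menu", frozenset({"alice", "bob"})),
]

# Same prompt, different users: each one only sees what they can already access.
alice_docs = grounding_candidates("alice", "revenue", corpus)
bob_docs = grounding_candidates("bob", "revenue", corpus)
```

In this toy corpus, the same "revenue" prompt grounds on the sensitive contract for `alice` but not for `bob`, because only `alice` is on its access list. The assistant adds no new access, yet anything a user can already reach, sensitive or not, can end up in a response.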

That's the key point: Copilot isn't generating random text. It produces work based on real business data and real user activity, so it must be treated as part of the organization's compliance scope.